FSI Deploys Service Mesh-based Prototype to Navy Lab

Article by Dale McIntosh

FSI deployed a service mesh-based prototype to a San Diego-based Navy lab. The prototype is a geospatial visualization subsystem that lets the warfighter visualize alerts, events, and other conditions of interest to support rapid decision making. The system was initially developed as a component of the Navy’s research into tactical cloud environments, sponsored by the Office of Naval Research (ONR).

FSI demonstrated its leadership on the cutting edge of military software development and cloud deployments by adopting a containerized microservice mesh architecture for the prototype. We wanted to avoid the “lift and shift” approach generally taken when adapting monolithic applications to a microservices architecture. The transition to cloud-native microservices began with a breakdown of the prototype’s monolithic applications into service-level components. Then we developed and tested our deployments in Docker, Kubernetes, and OpenShift. Finally, we created our service mesh by adding sidecar proxies for each microservice, an API gateway with Ambassador, identity management with Keycloak, and service discovery from Consul. The most important step in this process was successfully onboarding our team to Docker, together with the team’s willingness to learn new technologies.

Our team is made up of continuous learners who saw that the benefits of the microservice architecture outweighed the added system complexity. Each of us started by writing a Dockerfile that assembles a microservice’s code and dependencies into a Docker image. Then we learned how to use Docker Compose to orchestrate local deployments of our Docker images as running containers. At this stage we started to use Docker volumes to persist stateful information that would otherwise be lost along with our ephemeral Docker containers. Now that we had a running microservice-based application, we needed to optimize the deployment of our containers for the cloud.
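To make the Compose and volume step concrete, here is a minimal sketch, not the prototype’s actual configuration. It builds the structure of a Compose file for one service with a named volume and prints it as JSON for inspection. The key names (`services`, `image`, `ports`, `volumes`) follow the Compose file format; the service name, image tag, port, and data path are hypothetical.

```python
import json


def compose_spec(service, image, port, data_dir):
    """Build a Compose-style spec for one service backed by a named volume."""
    volume = f"{service}-data"  # named volume outlives any single container
    return {
        "services": {
            service: {
                "image": image,
                "ports": [f"{port}:{port}"],          # host:container
                "volumes": [f"{volume}:{data_dir}"],  # volume:mount path
            }
        },
        # Declaring the volume at the top level lets Compose manage it.
        "volumes": {volume: {}},
    }


# Hypothetical service, image, and path, used only for illustration.
spec = compose_spec("alerts", "example/alerts:latest", 8080, "/var/lib/alerts")
print(json.dumps(spec, indent=2))
```

Because the volume is declared separately from the service, removing and recreating the container leaves the data under the mount path intact.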

Taking our deployments to the next level with Kubernetes taught us how to get our containers running in multiple environments with minimal maintenance. In a team effort, our software developers broke out as many variables as possible and placed them into Kubernetes ConfigMaps. These configuration mappings allowed us to use the same Docker images in multiple environments, and switching between environments only required us to change a single file at the container orchestration level. We also took advantage of Kubernetes container readiness and health checks. If any container fell out of our spec of a healthy container, Kubernetes would automatically restart or recreate it for us. Now that we had a cloud-deployable microservice application, we needed a Continuous Integration / Continuous Delivery (CI/CD) pipeline.
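The following is a minimal sketch of the service side of that arrangement, under stated assumptions rather than the prototype’s actual code. It assumes the ConfigMap values reach the container as environment variables, and that Kubernetes probes are pointed at two HTTP paths. The variable names (`TILE_SERVER_URL`, `LOG_LEVEL`, `PORT`) and the paths (`/healthz`, `/ready`) are hypothetical.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def load_config(env=os.environ):
    """Read settings from the environment so one image fits every cluster."""
    return {
        "tile_server_url": env.get("TILE_SERVER_URL", "http://localhost:8080/tiles"),
        "log_level": env.get("LOG_LEVEL", "INFO"),
        "port": int(env.get("PORT", "8000")),
    }


def probe_response(path, ready):
    """Return (HTTP status, body) for the hypothetical probe endpoints."""
    if path == "/healthz":  # health check: the process is up
        return 200, {"status": "alive"}
    if path == "/ready":    # readiness check: safe to receive traffic
        return (200 if ready else 503), {"ready": ready}
    return 404, {"error": "not found"}


class ProbeHandler(BaseHTTPRequestHandler):
    ready = False  # flipped to True once startup work has finished

    def do_GET(self):
        status, body = probe_response(self.path, ProbeHandler.ready)
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep probe traffic out of the logs


def serve():
    """Start the probe server; blocks until the process is stopped."""
    config = load_config()
    server = HTTPServer(("0.0.0.0", config["port"]), ProbeHandler)
    ProbeHandler.ready = True
    server.serve_forever()
```

Calling `serve()` starts the endpoints. Nothing in the code names a specific environment, so moving the image to another cluster means changing only the values supplied from outside the container.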

OpenShift gave us the flexibility to set up a Jenkins and SonarQube pipeline alongside our running containers. We created OpenShift BuildConfigs that would spin up ephemeral Jenkins containers with all the build tools needed to compile and test our code. With the click of a button, Jenkins would pull the latest version of our code, compile it, test it, and send it to SonarQube for static code analysis. The SonarQube scan results were then published for review by our developers. Jenkins would then send the compiled code to OpenShift to be built into a Docker image. Once OpenShift had built the latest Docker image for our service, it would trigger a new rollout of our container. With zero downtime, OpenShift would spin up a new container from the latest image and wait until it was ready to be rolled out. Once it was ready, OpenShift would shift the load balancer to send incoming requests to the new container while the old one was shutting down.
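The rollout sequence described above can be modeled in a few lines. This is a toy simulation of the ordering (start new, wait for readiness, shift traffic, stop old), not OpenShift’s implementation; the `Container`, `LoadBalancer`, and `rolling_update` names and the image tags are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Container:
    image: str
    ready: bool = False
    running: bool = True


@dataclass
class LoadBalancer:
    target: Container

    def route(self, request: str) -> str:
        """Send a request to whichever container is the current target."""
        return f"{request} -> {self.target.image}"


def rolling_update(lb, new_image, wait_until_ready):
    """Replace the load balancer's target without a gap in service.

    wait_until_ready is a callable that returns True once the new
    container passes its readiness check, or False if it never does.
    """
    old = lb.target
    new = Container(image=new_image)  # 1. start the new container alongside the old
    if not wait_until_ready(new):     # 2. gate on readiness
        new.running = False           #    failed rollout: old keeps serving
        return False
    lb.target = new                   # 3. shift traffic to the new container
    old.running = False               # 4. only now shut down the old one
    return True


def becomes_ready(container):
    container.ready = True
    return True


lb = LoadBalancer(Container("viz:1", ready=True))
first_container = lb.target
succeeded = rolling_update(lb, "viz:2", becomes_ready)
print(lb.route("GET /alerts"))
```

The key property is that the load balancer always points at a running container: traffic moves only after the readiness gate passes, and a failed rollout leaves the old container in place.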

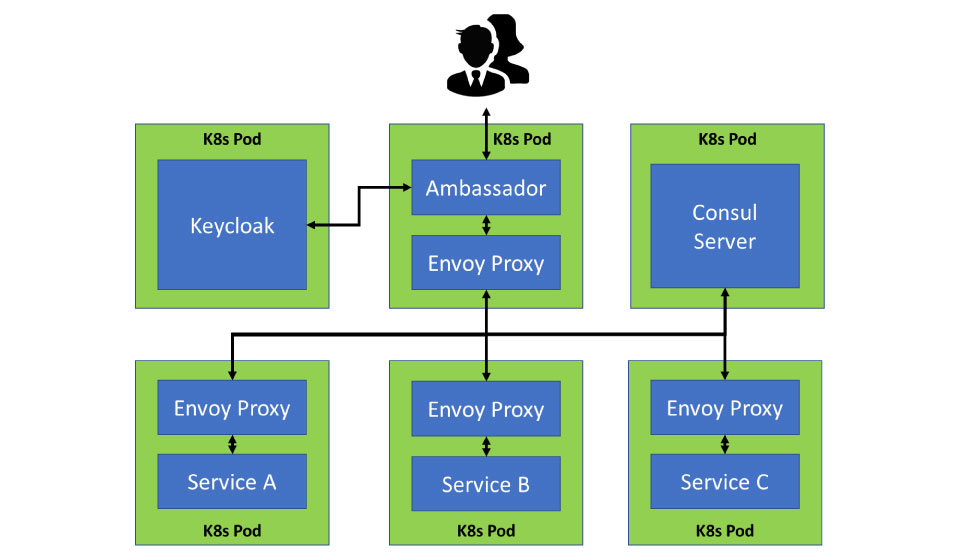

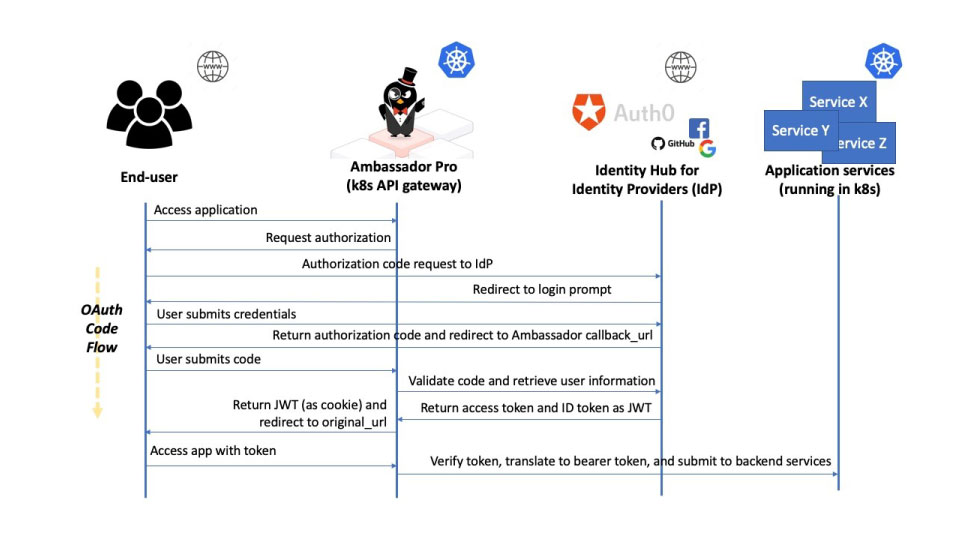

With functional microservices, and a CI/CD pipeline to support them, we were then able to implement our service mesh architecture. We created the service mesh by adding an Ambassador API gateway at the edge and integrating it with Keycloak for identity management. Then we added Envoy sidecar proxy containers running next to each of our microservices, along with a Consul server. Ambassador and its own sidecar would be our front proxy, and the microservices’ sidecars would register themselves with Consul’s service discovery (see Figure 1). This allowed us to deploy our application across multiple nodes in a Kubernetes or OpenShift cluster, with Consul always knowing where each running microservice could be reached so that clients’ requests could be routed from Ambassador to it. Furthermore, this setup gave us the ability to provide authentication and end-to-end encryption from the edge to the sidecar, and between sidecars, without changing any of the behavior of our actual microservice application (see Figure 2). FSI worked with Navy civilian personnel to install the service mesh architecture in an experimentation lab and will assist the other component contributors in configuring their software to enable full system functionality.
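The register-then-resolve pattern behind service discovery can be illustrated with a toy in-memory registry. This is a conceptual sketch only: it does not use Consul’s API, and the class, method names, service name, and addresses are all hypothetical. Sidecars register an address when they start, and the gateway asks the registry for a live instance on each request.

```python
class ServiceRegistry:
    """Toy stand-in for a service discovery catalog."""

    def __init__(self):
        self._instances = {}  # service name -> list of "host:port" addresses
        self._cursors = {}    # service name -> next round-robin position

    def register(self, service, address):
        """Called by a sidecar when its microservice comes up."""
        addresses = self._instances.setdefault(service, [])
        if address not in addresses:
            addresses.append(address)

    def deregister(self, service, address):
        """Called when an instance shuts down or fails its health check."""
        addresses = self._instances.get(service, [])
        if address in addresses:
            addresses.remove(address)

    def resolve(self, service):
        """Return one registered address, rotating across instances."""
        addresses = self._instances.get(service, [])
        if not addresses:
            raise LookupError(f"no running instance of {service!r}")
        index = self._cursors.get(service, 0) % len(addresses)
        self._cursors[service] = index + 1
        return addresses[index]


registry = ServiceRegistry()
registry.register("alerts", "10.0.0.1:8001")  # instance on node 1
registry.register("alerts", "10.0.0.2:8001")  # instance on node 2

picks = [registry.resolve("alerts") for _ in range(3)]
print(picks)

registry.deregister("alerts", "10.0.0.1:8001")  # node 1 goes away
print(registry.resolve("alerts"))
```

Because callers look up an address at request time rather than hard-coding one, instances can move between nodes or be replaced without any change to the calling service.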

---

Figure 1: Simplified microservice mesh architecture.

Figure 2: Ambassador Pro Authentication and Authorization Sequence Diagram [1]

---

Dale McIntosh has been the Chief Technology Officer at Forward Slope Inc. since 2003. Mr. McIntosh has over 35 years of software/system development experience; the last 19 years of his career have been focused on the development of Command, Control, Communications, Computers, and Intelligence (C4I) systems for the U.S. Navy. Most recently, Mr. McIntosh has been the principal investigator for several projects developing advanced Common Operational Picture (COP) visualization technologies and meteorological and oceanographic (METOC) systems in support of the Office of Naval Research (ONR) and Program Executive Office (PEO) C4I.

References:

[1] OAuth and OIDC Overview. (n.d.). Retrieved December 27, 2019, from https://www.getambassador.io/concepts/auth-overview/.